Meet the Artist Challenging Human Bias in the Age of Artificial Intelligence

Artist Stephanie Dinkins investigates the way culture, race and gender are programmed into technology. Culture Trip speaks to her about the coming age of artificial intelligence, teaching robots yo momma jokes and how we can avoid codifying human prejudice into the systems shaping our future.

Culture Trip (CT): How would you describe the work that you do?

Stephanie Dinkins (SD): I look into artificial intelligence (AI) through a community lens – particularly communities of colour – and investigate what it means for the world to be shifting towards an artificially intelligent era without those communities participating in the creation of these systems.

AI systems are impacting everything from medicine and educational testing to the criminal justice system. In the US, judges are using algorithms to help them decide who is likely to commit more crime and how much time they should get. Even on a more basic level, what does it mean if, for example, the police want to listen in on what's being said in your house through your Google Home account?

For the most part, people of colour are not at the table when [AI systems] are getting made. That’s a really scary prospect because such large and impactful questions are being put to these systems and they’re being used to aid in making decisions about really big issues at big points of inflection in people’s lives. If communities of colour are not participating [in creating AI] in high enough numbers, we can’t make the system fair and equitable and really good for everybody who’s going to be touched by it.

CT: How does your background as an artist help you to communicate these issues?

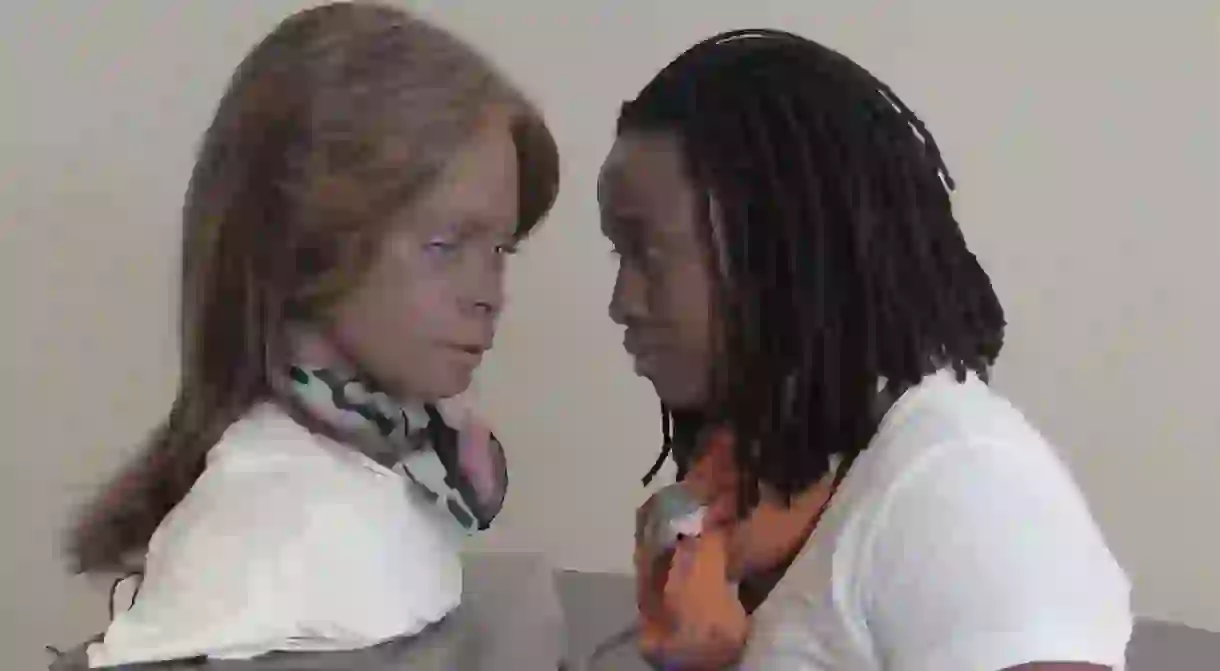

SD: I call myself an artist-citizen. I stumbled across Bina48 [the only humanoid robot that presents as a black female] and started questioning what these systems are and who's making them. It dawned on me that this needs to be brought to the forefront, and that I could use my role as an artist to act as a bridge between different communities: the community that I live in, some of the top AI researchers in the world, and the people who are funding [the development of AI systems and] who are able to change the tide of what we're looking at as a society through that funding.

It's a really privileged position to bring the community that I care so much about into that space and make sure they're thinking about what the ethics of AI and algorithms are. And [to do so] not only from a big theoretical space, but from the practical, on-the-ground, lived experiences of people who are being touched by these [algorithms] and might not even know it yet.

CT: What are you working on now?

SD: I’m creating something I’m calling an entity – because I don’t know what form it’s going to take or if I want it to be an embodied robot – that will be able to talk to people and tell the story of the lived experiences of three generations of my family, spanning about 100 years from the Great Migration [the movement of six million African Americans out of the rural Southern United States to the urban Northeast, Midwest and West that occurred between 1916 and 1970] to 9/11 and beyond.

It’s two direct generations: my aunt, who has functioned as my mother for a very long time and is about 30 years older than I am, and my niece, who is 30 years younger than I am. I’m the middle generation, again playing that bridge role, between the older generation and those going forward.

You train AI on information, so we're putting interviews that I've done with my family into an AI system, or a neural network, and augmenting it with histories of places that we've touched, Google search histories and other entries.

Right now, the entity can talk and say the basics. We'll have to see what happens, but at the moment it says "happy" and "love" a lot because my family is all about love and caring and taking care of each other, and so the AI expresses itself in terms of love. For some reason it also keeps talking about being a movie, which is interesting because I don't know where it got that.

CT: Drawing attention to issues with art feels like the first step in a long road towards building an inclusive future. What do you think are the next steps?

SD: Understanding how the system works, even to a small extent, allows you to stand up to it more.

I've been doing workshops where I get groups to look at the back end of these AI and chatbot systems so they can see what goes into them, what comes out, and how people shape what comes out.

I did a workshop with a group of kids using Dialogflow, an online system for making interactive chatbots, and we talked a lot about culture and what it means to have your own language come out of a system like this, and why that would be different from just hearing Siri talk. A group of girls I was working with put in yo momma jokes, which I thought was great, because it showed them that what you put into the system is what comes out and [illustrated how] AI becomes a reflection of you.

There really needs to be way more cross-pollination [between AI developers and diverse communities]. There are a lot of initiatives where corporations are trying to pull [more diverse] people in to do more programming, but we also need to train folks about what these systems are, how they’re working and what they mean. At the very least, we need to start educating technologists more broadly, with the humanities, so that things like creativity are a part of the way that they think. If we don’t have diversity [of thought and identity], it becomes super scary.

CT: How optimistic are you about our future with technology?

SD: I’m an optimist at heart. For me that means trying to work with technology, thinking of technology as a partnership, rather than an overlord.

Technology has gotten to the point where soon it will be programming itself. The question is how we then work with it well, how we inform it well and how we inform it equitably, so that it's not just based on histories that are already full of bias, but rather on a rounder vision of who we are. If we keep putting the same things in, we are going to get the same things out. There's a lot of work to be done to get there, but I feel you have to try.
